Latency is the time it takes for data to reach its destination and back. Video latency is the unseen delay in transferring a single frame from the camera to end users, or the delay between an action taking place and it being displayed on a screen. Generally, content producers aim for the lowest latency possible without sacrificing quality.

What delays increase streaming latency?

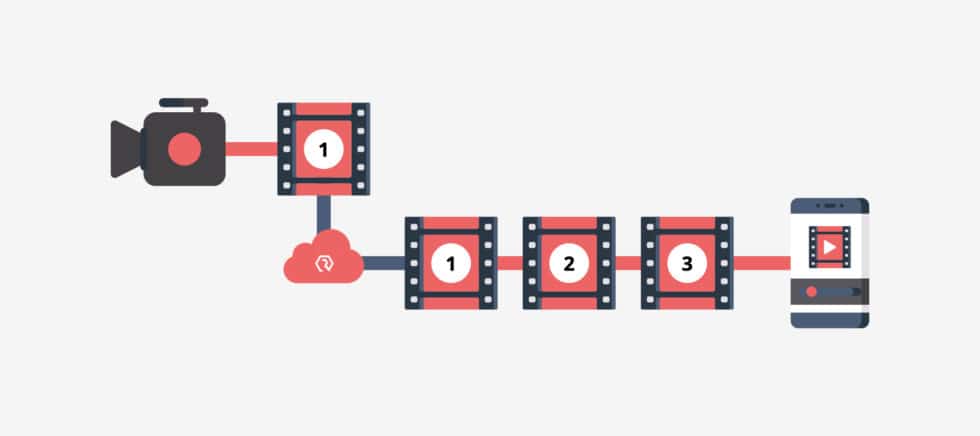

During the travel period from camera to screen, there are numerous steps along the way where delays can be introduced, from encoding and uploading the video content to an over-the-top (OTT) video platform to cloud transcoding and video delivery.
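As a rough illustration, end-to-end latency can be treated as the sum of the delays added at each of these steps. The sketch below assumes hypothetical stage names and made-up millisecond figures; they are not measurements from any real workflow.

```python
# Hypothetical per-stage delays in milliseconds (illustrative values only).
STAGE_DELAYS_MS = {
    "encoding": 500,
    "upload": 300,
    "cloud_transcoding": 1000,
    "delivery": 200,
    "player_buffer": 6000,
}


def total_latency_ms(stage_delays):
    """Return end-to-end latency as the sum of all stage delays."""
    return sum(stage_delays.values())


def largest_contributor(stage_delays):
    """Return the name of the stage that adds the most delay."""
    return max(stage_delays, key=stage_delays.get)


print(total_latency_ms(STAGE_DELAYS_MS))     # 8000
print(largest_contributor(STAGE_DELAYS_MS))  # player_buffer
```

Breaking latency down this way makes it easier to see which stage is worth optimizing first.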

Encoding and compression delays

During the first stage of a livestream, the video content is usually compressed to reduce the amount of data that needs to be transmitted to an online video platform or other video delivery software. This encoding and upload stage, often called the first mile, can contribute to video latency depending on the type of encoder used.

In general, hardware encoders have higher encoding speeds and lead to lower latency than software encoders because they’re devices that are purpose-built for encoding.

Streaming protocol delays

The streaming protocol used can also have an enormous impact on latency. HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (MPEG-DASH) are the two main protocols used for live streaming because they deliver video content over HTTP and are compatible with most devices.

More recently, both protocols have added low-latency extensions that build on the MPEG Common Media Application Format (CMAF).
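One reason the protocol matters is that HLS and MPEG-DASH deliver video in segments, and players typically buffer a few segments before playback begins. The sketch below is a back-of-the-envelope estimate of that buffering delay only. The function name and the example durations are assumptions chosen for illustration, not figures from any specification or product.

```python
def buffer_latency_seconds(segment_duration_s, segments_buffered):
    """Approximate the delay added by buffering whole segments before playback."""
    if segment_duration_s <= 0 or segments_buffered < 0:
        raise ValueError("duration must be positive and count non-negative")
    return segment_duration_s * segments_buffered


# Hypothetical configurations: longer segments mean a larger buffering delay.
print(buffer_latency_seconds(6, 3))    # 18
print(buffer_latency_seconds(2, 3))    # 6
print(buffer_latency_seconds(0.5, 3))  # 1.5
```

Shorter segments, or the partial chunks used by low-latency modes, shrink this delay, at the cost of more requests and less room to absorb network jitter.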

Lack of a CDN

In addition, content delivery networks (CDNs) can help reduce latency by leveraging thousands of servers distributed around the world. A CDN can shorten last-mile delivery by routing video content through its network using the fastest path possible, which can significantly reduce latency, buffering, and other issues. CDNs also help distribute the load across a larger number of streaming servers for large-scale broadcasts.

Playback latency

Finally, latency can be introduced during playback because the viewer’s internet bandwidth and device processing power impact how fast the stream can be downloaded. That’s why many broadcasters offer streams at multiple bitrates and leverage delivery protocols that support adaptive bitrate streaming (ABS). This approach allows video players to choose a video bitrate that maximizes quality without leading to buffering or high latency.
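The selection step can be sketched as follows. This is a minimal illustration, assuming a hypothetical bitrate ladder and a simple safety margin; real players use more elaborate heuristics that also weigh buffer level and screen size.

```python
# Hypothetical bitrate ladder in kilobits per second (illustrative values only).
RENDITIONS_KBPS = [400, 1200, 2500, 5000]


def choose_bitrate(measured_kbps, renditions=RENDITIONS_KBPS, safety_factor=0.8):
    """Pick the highest rendition that fits within a fraction of measured bandwidth.

    The safety factor leaves headroom so that small bandwidth dips
    do not immediately cause buffering.
    """
    budget = measured_kbps * safety_factor
    candidates = [r for r in renditions if r <= budget]
    # Fall back to the lowest rendition when nothing fits the budget.
    return max(candidates) if candidates else min(renditions)


print(choose_bitrate(4000))   # 2500
print(choose_bitrate(300))    # 400
print(choose_bitrate(10000))  # 5000
```

A player would re-run a check like this as its bandwidth estimate changes, switching renditions between segments.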

How Resi minimizes video latency

Resi uses a combination of robust hardware encoders and cutting-edge, scalable cloud infrastructure to ensure optimized low-latency video processing. While low-latency streams are sometimes important in mission-critical situations where near-real-time delivery is necessary, Resi’s Resilient Streaming Protocol (RSP) takes a different approach. By introducing a short delay, RSP can deliver complete, error-free audio and video over unpredictable networks or even wireless cellular hotspots.

Resi’s Livestream Platform then streams video to any device using low-latency protocols like HLS and MPEG-DASH. Our cloud-based streaming platform also includes modern capabilities like cloud transcoding and ABS for a complete end-to-end solution. With Resi, broadcasters have easy-to-use tools for quickly delivering ultra-reliable livestreams at scale.